SOC Analyst Pyramid

Introduction

Last weekend, I gave a 10-minute fireside chat during lunch at BSidesSATX 2015 [1]. It was an informal presentation in which I discussed some of the issues facing security analysts who work in an organization's Security Operations Center (SOC).

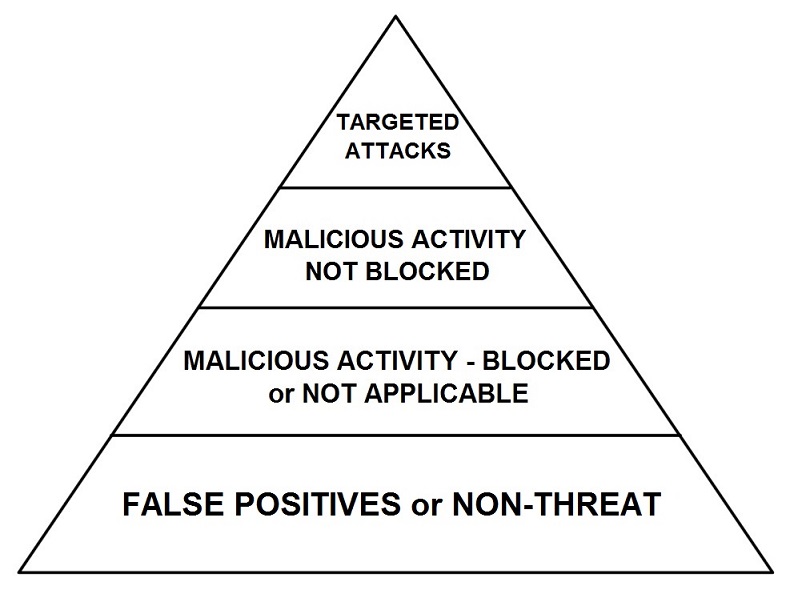

With only 10 minutes, most of the presentation covered a "SOC analyst pyramid" describing the types of activity any organization will encounter.

For this presentation, I defined security analysts as people who monitor their organization's network for near-real-time detection of malicious activity. Security analysts review alerts from their organization's intrusion detection systems (IDS) or security information and event management (SIEM) systems. These alerts are based on various sources, such as network traffic and event logs.

SOC Analyst Pyramid

From top to bottom, the pyramid reads:

- Targeted attacks

- Malicious activity - not blocked

- Malicious activity - blocked or not applicable

- False positives or non-threat

Base of the SOC Analyst Pyramid

The base of the SOC analyst pyramid consists of false positives or valid activity unique to your organization's network. In my years as an analyst, investigating this activity took up the majority of my time. At times, you'll need to document why an alert is a false positive, so the alert can be filtered and the team can focus on genuinely suspicious activity.

In my experience, no matter how well-tuned your security monitoring system is, analysts spend most of their time at this level of the pyramid.

Next Tier: Malicious Activity - Blocked or Not Applicable

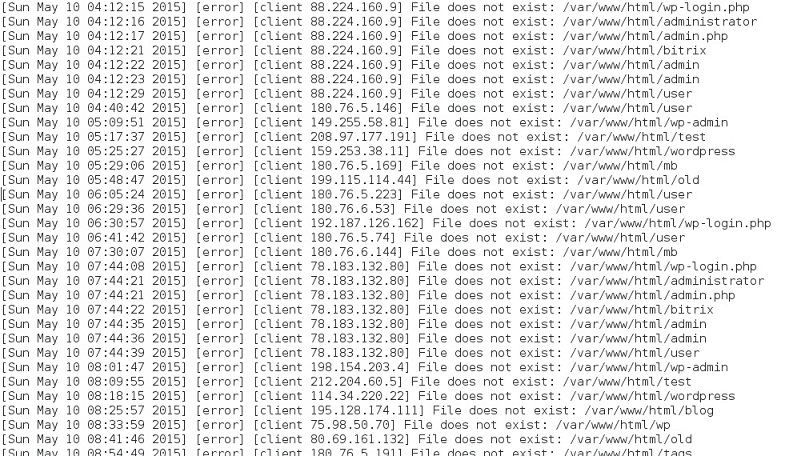

The next level involves malicious activity that's either blocked or not applicable. Blocked activity includes spam with malware attachments (malspam) blocked by your organization's spam filters. Non-applicable activity includes certain types of scanning. The intent is malicious, but the scans are blind and not applicable to the targeted host. For example, here's a short list of activity from the error logs of a server I run:

That server doesn't run WordPress, nor does it have any sort of web-based administrative login, but I find WordPress-based scans hitting the server's IP address every day. That shows malicious intent, but it's not applicable.

SOC analysts focused on near-real-time detection of malicious activity generally don't spend much time on this tier of the pyramid.

Next Tier: Malicious Activity - Not Blocked

The next tier of the pyramid involves malicious activity that somehow makes it past your organization's various security measures. This level includes drive-by infections from an exploit kit after a user views a compromised website. Depending on your organization's policies, adware might also be an issue. Resolving issues involving adware or potentially unwanted programs (PUP) can give SOC personnel practice for resolving hosts infected with actual malware. Just make sure analysts don't focus on adware and PUP. The focus of a SOC should always be on malicious activity.

This level of the pyramid is where analysts develop their skill in recognizing malicious activity. Exploit kit traffic does not always result in an infection. SOC personnel should be able to examine this sort of malicious traffic and determine whether a host actually became infected. I've seen too many people receive an alert and assume a host was infected without digging deeper to see what actually happened.

Malware infections and compromised hosts found at this level of the pyramid are not targeted. This type of malicious activity is a concern for any organization, not just your employer.

Top of the Pyramid: Targeted Attacks

This tier is where a SOC proves its value to an organization. If bad actors, criminal groups, or hostile foreign agents gain a foothold in your organization's infrastructure, you might not be able to get rid of them. Detecting intrusions early and preventing these bad actors from further access is extremely important. Any number of sources will tell you data breaches are not a matter of "if" but "when" [2][3][4].

Targeted attacks include spear phishing attempts to gather login credentials from specific employees. Personnel using a chat system for sales or support can also be targeted. Denial of Service (DoS) and Distributed Denial of Service (DDoS) attacks usually fall into this tier. Watering hole attacks [5] are also an issue.
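
The pyramid can also be read as a triage order for an alert that has already been investigated. Below is a minimal Python sketch, assuming a hypothetical `Alert` record whose boolean fields an analyst fills in during the investigation. The field names, the `Tier` enum, and the `classify` function are illustrative only and are not part of any IDS or SIEM product.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    """The four tiers of the SOC analyst pyramid, from base to top."""
    FALSE_POSITIVE = "False positives or non-threat"
    BLOCKED_OR_NA = "Malicious activity - blocked or not applicable"
    NOT_BLOCKED = "Malicious activity - not blocked"
    TARGETED = "Targeted attacks"


@dataclass
class Alert:
    # Hypothetical fields recorded by the analyst after investigating an alert.
    malicious: bool   # False for false positives or valid, non-threat activity
    blocked: bool     # True if a control (e.g. a spam filter) stopped the activity
    applicable: bool  # False for blind scans against software the host doesn't run
    targeted: bool    # True if the activity was aimed at this organization specifically


def classify(alert: Alert) -> Tier:
    """Place an investigated alert on the pyramid."""
    if not alert.malicious:
        return Tier.FALSE_POSITIVE
    # Assumption: targeted activity goes to the top tier even if it was blocked.
    if alert.targeted:
        return Tier.TARGETED
    if alert.blocked or not alert.applicable:
        return Tier.BLOCKED_OR_NA
    return Tier.NOT_BLOCKED


# Example: daily WordPress scans against a server that doesn't run WordPress.
print(classify(Alert(malicious=True, blocked=False, applicable=False, targeted=False)).value)
```

Checking `targeted` before `blocked` is a judgment call in this sketch; the pyramid itself doesn't say where a blocked targeted attack belongs, and an organization could reasonably order those checks differently.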

Final Words

I've been a SOC analyst for two employers: one in the government, and the other in the private sector. In both cases, I believe the SOC analyst pyramid applies. Feel free to leave a comment if you have any opinions on the matter.

---

Brad Duncan, Security Researcher at Rackspace

Blog: www.malware-traffic-analysis.net - Twitter: @malware_traffic

References:

[1] http://www.securitybsides.com/w/page/91978878/BSidesSATX_2015

[2] http://www.securityinfowatch.com/article/12052877/preparing-for-your-companys-inevitable-data-breach

[3] http://www.maslon.com/webfiles/Emails_2015/LegalAlerts/2015_LegalAlert_CyberSecurity_DataBreach_webversion.html

[4] http://www.hechtins.com/blog/data_breach--not_if_but_when.aspx

[5] http://www.trendmicro.com.au/vinfo/au/threat-encyclopedia/web-attack/137/watering-hole-101

Comments

That said, I think analysts also cover other domains, such as APT, so it might be better to rename the word "malicious."

Anonymous

May 11th 2015

We often sign a customer, take over their untuned network, and spend most of our time cleaning up. Identifying actual attacks becomes like searching for a needle in a haystack. Based on the findings, we also recommend tuning. Sometimes events get missed, and we all know what comes next.

I also like to check baseline configurations when we sign up new customers: basic checks that identify any violations of industry policies or standards, for example outbound NTP traffic, use of default usernames, etc.

I wanted to make this my GCIA Gold paper topic: life as an analyst in a SOC. I am glad other big brains are thinking the same way.

Anonymous

May 11th 2015

600 minor incidents, such as "needed a Band-Aid," lead to

30 accidents requiring treatment by trained personnel, which lead to

10 serious accidents requiring transport to a hospital, which lead to

1 fatal accident.

http://crsp-safety101.blogspot.com/2012/07/the-safety-triangle-explained.html

If the relationship is not maintained, it generally means that people are under-reporting something, usually because of disciplinary threats for working in an unsafe manner.

Anonymous

May 11th 2015

Brent Eads, CISSP-ISSAP

A Fortune 100 Manufacturer

Anonymous

May 11th 2015

Michael

@chief_m1ke

Anonymous

May 11th 2015

One question: if SOC analysts do not spend time on the "malicious activity - blocked" tier, how does the SOC build intelligence surrounding the company's adversaries? Is that something that would be left to another team (not the SOC) or not something viewed as important at all?

Anonymous

May 11th 2015

Threat intelligence from blocked activity is certainly important. Organizations with a mature security structure would not ignore information from that tier.

Anonymous

May 11th 2015

A new plant manager calculated the numbers for all of the tiers and discovered the bottom one was about half of what it should have been, while the other three were right on target, except that the fatality had not yet occurred. So the number of incidents was the same, but people were under-reporting to get the $50. There was also peer pressure not to report, because either everyone got the $50 or no one did.

He held three plant-wide meetings, one per shift. He introduced the safety triangle and the current numbers. He told everyone to look at the person to their left and then at the person to their right. Then he said the numbers indicated that at least one of the people they had just looked at should be dead, and if things did not change for real, one of them would die soon. It really got people's attention. He then changed the metric to a total reduction across all tiers, not just the bottom one. People got it, and within a few months they all got their $50 again, but this time with real numbers. No one died, and hospital transports decreased.

Anonymous

May 12th 2015

In any case, Brad, I'd like to hear about how an analyst progresses in their career.

Anonymous

May 13th 2015
