Targeted social engineering

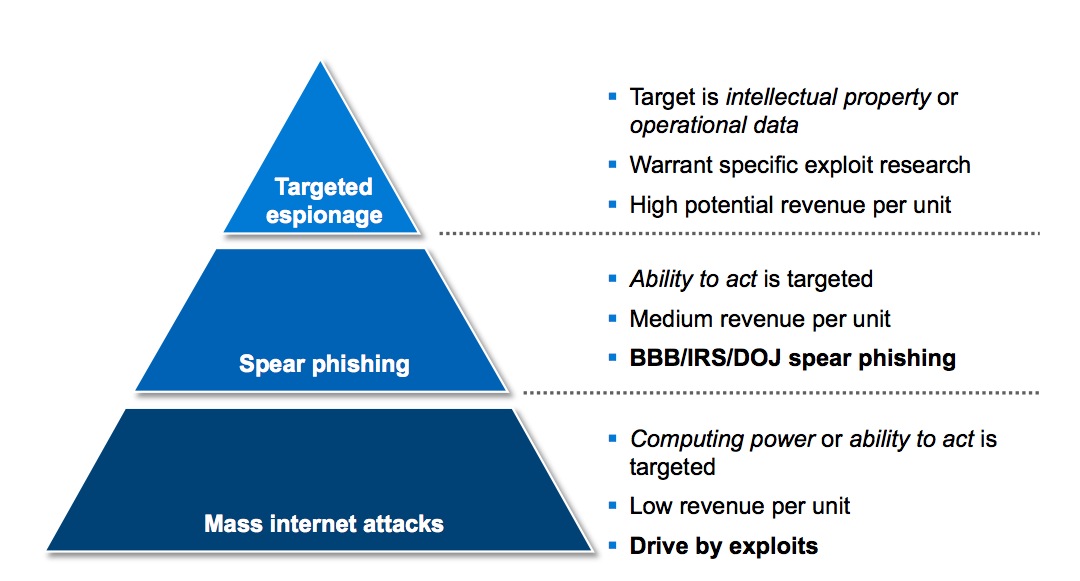

The graph above is a somewhat dated and simplified overview of the three main attack “modus operandi” I generally distinguish.

There are many variants of each, but in general, mass attacks do not differentiate between targets in the exploit, vector or social engineering they use: everything is designed to work against a large audience. In spear phishing, the attack is customized to a smaller audience, such as the CEOs of a relatively wide set of organizations, or the visitors to a specific web site. Targeted attacks are those in which only a single organization, or just a few people, are specifically targeted. Here, both the exploit and the social engineering are customized almost to the level of the individual. The cost of executing such an attack is relatively high, but so is the revenue per unit (that is, per compromised user).

It may surprise you to hear that many targeted attacks do not involve exploiting software vulnerabilities at all. Attackers tend to make an attack only as complex as it needs to be to succeed; when it comes to style, less is more. In many cases, that just means sending a user an executable file, or its equivalent. If the proxy blocks executables, they’ll send it as an encrypted zip archive, with the password in the body of the e-mail.
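Because a gateway scanner cannot unpack a password-protected archive, one defensive option is simply to flag such attachments for review. A minimal sketch in Python, assuming the attachment is available as raw bytes; the function name `has_encrypted_members` is hypothetical. It relies on the ZIP format’s general purpose bit flag, whose bit 0 marks an encrypted entry and which Python’s `zipfile` module exposes as `ZipInfo.flag_bits`.

```python
import io
import zipfile


def has_encrypted_members(data: bytes) -> bool:
    """Return True if any entry in the ZIP archive held in `data` is encrypted.

    Bit 0 of each entry's general purpose bit flag marks it as encrypted.
    A mail gateway cannot scan such entries without the password, which the
    attacker typically supplies in the message body.
    """
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        return any(info.flag_bits & 0x1 for info in archive.infolist())
```

Whether to quarantine, strip or merely warn on such archives is a policy decision; the check only tells you that the content could not be inspected.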

The hidden aspect that makes the attack successful is, more often than not, the social engineering. Let’s look at some modus operandi that have actually been used in the wild and proven wildly successful. I’ll try to include some example stories from the field.

Cognitive dissonance: In early 2006, a limited set of recipients received an e-mail message in Traditional Chinese describing a major “loss of face” suffered by an individual for whom the US government had rolled out the red carpet. The attachment had the filename “HUJINTAO”, which is also the name of the Paramount Leader of the People’s Republic of China. A reader with strong feelings about the president is likely to react with a secondary feeling about his “face”, an important concept in many cultures. Such cognitive dissonance is a powerful tool of persuasion, because the reader wants to resolve it. In this case, the only way for the reader to find out was to open the document. This was a very powerful psychological attack: just three days before the message was sent out, Chinese President Hu Jintao had experienced several issues during a visit to Washington DC.

Mimicked writing styles: Having a blog clearly makes you better known. Less obviously, it also makes you better understood. By reading the communications people release to the world, an attacker can deduce how they address one another, which makes their contacts a wee bit less safe online. In one incident, an attacker used phrases taken directly from a public blog, as well as a cordial greeting the blogger had used when writing about a personal topic. This made the message significantly more authentic to the target, who duly clicked on the attachment.

Matching content to topics of interest: This is probably the most intuitive technique. Content that interests the reader is more likely to get clicked. However, exploiting specific situations and thoroughly understanding the target’s needs is even more effective. During the Tibetan protests in early 2008, a US-based NGO that was actively helping Tibetans get video material from Lhasa to activist groups started receiving trojaned videos.

Convincing users to forward messages: Most people have a limited circle of friends whose content they will trust unconditionally. If attackers can fingerprint this circle, for example through social networks, they can abuse it to make a message appear more trustworthy than it really is. In a real-life example, the attackers found that their target had a friend who was relatively inexperienced with IT, and who had publicly said so in an online article. They spoofed a message from one of this friend’s own acquaintances, saying he was interested in applying for a job at the organization where the actual target worked, and asked the friend to forward it. The friend forwarded the message to the target and vouched for the applicant as a “trusted contact”. As a result, malicious content suddenly became very trusted.

Backdooring viral content: Everyone has at some point received a “funny” document by e-mail. Pictures of dancing elephants are popular; talking cats even more so. This type of viral content often takes on a life of its own and becomes a “meme”. A popular meme in Taiwan in 2007 was a document with pictures of smiling dogs. It was distributed through forums, e-mail and instant messaging, and quickly became “trusted” content. About three days into the meme, individuals at a single company started receiving it too, only this time with backdoored content. Interestingly, the attackers did make one distinction: the malicious document had a single additional space at the end of the file.
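A one-byte change such as that trailing space is enough to change a cryptographic hash, so comparing attachments against digests of copies already seen lets a defender notice that a “familiar” document is not actually the same file. A minimal sketch, assuming a set of SHA-256 digests of previously circulated copies is available; `sha256_hex` and `matches_known_copy` are hypothetical names, and the byte strings are illustrative only. A mismatch shows that the file differs, not that it is malicious.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of a file's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def matches_known_copy(attachment: bytes, known_digests: set[str]) -> bool:
    """True only if the attachment is byte-for-byte identical to a copy already seen."""
    return sha256_hex(attachment) in known_digests


# Illustrative data: a single trailing space is enough to break the match.
original = b"...smiling dogs..."
variant = original + b" "
known = {sha256_hex(original)}
print(matches_known_copy(original, known))  # True
print(matches_known_copy(variant, known))   # False
```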

The trusted news channel: About two months ago, a non-profit organization started receiving e-mails from a new Chinese news portal. While the site itself had little content, the e-mails contained insightful articles on Chinese affairs. The recipients did not know where this content came from, but found it useful. Because the messages contained no links, they slowly came to be trusted more and more. Eight messages into the cycle, one included a link to a browser exploit.

There are plenty more techniques of interest, but this article would become far too long if we covered them as well. Defending against these attacks is not as straightforward as patching a vulnerability. It requires several measures that complement one another:

- Technical mitigation that prevents attacks from succeeding, to the highest degree that can be accomplished cost-effectively. This includes anti-virus, blocking any unnecessary file types, and so on;

- Basic security awareness training for all employees who use IT resources. This should cover the minimum standards of IT behavior expected of your employees;

- A more thorough security awareness program for high-risk employees. This includes employees with access to highly sensitive or important information, but also those who have a wide public presence. The awareness program for these employees should focus on linking impact to realistic scenarios. An easy way to get employees interested in protecting themselves is to present a scenario in which an organization similar to yours is attacked using several of the techniques above;

- High-risk employees should receive regular updates on the organization’s risk profile and on any new attack vectors identified in your specific industry. Such information can often be obtained from your local neighborhood ISAC (Information Sharing and Analysis Center) or WARP (Warning, Advice and Reporting Point). It’s important to keep these employees involved in the process.
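Blocking unnecessary file types, part of the technical mitigation listed first, is easiest to maintain as an allowlist: permit the types the business actually needs and reject everything else. A minimal sketch, assuming the gateway sees each attachment’s filename; the `ALLOWED_EXTENSIONS` set and the `is_allowed` function are hypothetical and would have to reflect your own organization’s needs.

```python
from pathlib import PurePath

# Hypothetical allowlist: replace with the file types your organization needs.
ALLOWED_EXTENSIONS = frozenset(
    {".pdf", ".docx", ".xlsx", ".pptx", ".txt", ".png", ".jpg"}
)


def is_allowed(filename: str) -> bool:
    """Allow an attachment only if its final extension is on the allowlist.

    Everything else (executables, scripts, archives, files without an
    extension) is rejected by default.
    """
    return PurePath(filename).suffix.lower() in ALLOWED_EXTENSIONS


for name in ["minutes.pdf", "Budget.XLSX", "report.pdf.exe", "HUJINTAO", "dogs.zip"]:
    print(name, is_allowed(name))
```

Checking only the final extension catches double-extension tricks such as `report.pdf.exe`, but filenames are attacker-controlled, so a real gateway would also need to inspect file content.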

Cheers,

Maarten Van Horenbeeck
