Updates to Our Datafeeds/API

Most of the data we are collecting is freely available via our API. For quick documentation, see https://isc.sans.edu/api. One particularly popular feed is our list of "Researcher IPs." These are IP addresses connected to commercial and academic projects that scan the internet. These scans can account for a large percentage of your unsolicited inbound activity. One use of this feed is to add "color to your logs" by enriching your log data with it.

The simplest way to get the feed is via https://isc.sans.edu/api/threatcategory/research. By default, you will receive XML-formatted data; add "?json" to the URL for JSON or "?tab" for a tab-delimited plain-text file. Other options are available (see the API documentation linked above).
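As a minimal sketch of the format switch described above, the helper below only builds the URL for each of the three formats named in the text. The function name `research_feed_url` is made up for illustration, and it does not cover the other options listed in the API documentation.

```python
# Base URL of the Researcher IPs feed, as given in the text.
FEED_URL = "https://isc.sans.edu/api/threatcategory/research"

# Only the three formats mentioned in the text; XML is the default
# and needs no suffix.
_SUFFIXES = {"xml": "", "json": "?json", "tab": "?tab"}


def research_feed_url(fmt: str = "xml") -> str:
    """Return the feed URL for the requested output format (hypothetical helper)."""
    if fmt not in _SUFFIXES:
        raise ValueError(f"unsupported format: {fmt!r}")
    return FEED_URL + _SUFFIXES[fmt]


if __name__ == "__main__":
    for fmt in ("xml", "json", "tab"):
        print(fmt, research_feed_url(fmt))
```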

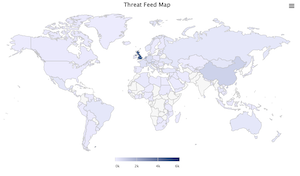

You may also explore the feeds at https://isc.sans.edu/threatmap.html

Currently, the feed lists almost 6,000 IP addresses. But many of them are from specific networks, and you may be able to aggregate them (an aggregated feed is coming...).
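Until an aggregated feed exists, one way to aggregate locally is with Python's standard `ipaddress` module. This is only a sketch: it assumes you have already extracted the addresses from the feed as plain strings, and the function names are illustrative. `collapse` merges only exactly adjacent addresses, while `count_by_prefix` shows which IPv4 /24 networks contribute the most entries.

```python
import ipaddress
from collections import Counter


def collapse(ips):
    """Merge adjacent addresses into the smallest set of covering networks."""
    nets = [ipaddress.ip_network(ip) for ip in ips]
    merged = []
    # collapse_addresses() rejects mixed IPv4/IPv6 input, so handle each separately.
    for version in (4, 6):
        same_version = [n for n in nets if n.version == version]
        merged.extend(ipaddress.collapse_addresses(same_version))
    return merged


def count_by_prefix(ips, prefix=24):
    """Count how many IPv4 addresses fall into each network of the given size."""
    counts = Counter()
    for ip in ips:
        addr = ipaddress.ip_address(ip)
        if addr.version != 4:
            continue  # this sketch only groups IPv4 addresses
        counts[ipaddress.ip_network(f"{addr}/{prefix}", strict=False)] += 1
    return counts


if __name__ == "__main__":
    # Documentation-range addresses, not real feed data.
    sample = ["192.0.2.0", "192.0.2.1", "192.0.2.2", "192.0.2.3", "198.51.100.7"]
    print([str(n) for n in collapse(sample)])
    print(count_by_prefix(sample).most_common(1))
```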

Another feed that we are experimenting with is a feed of newly registered domains. Sadly, not all top-level domains make the data available (in particular, country-code domains often don't). You can retrieve this feed via

https://isc.sans.edu/recentdomains

You can also add a date to look for new domains from a specific day, for example https://isc.sans.edu/api/recentdomains/2021-02-01. This feed is experimental, and performance may be slow at times.

Probably the best "enrichment" feed we have is our "Intelfeed." For each IP address, you will see labels associated with that IP address.

https://isc.sans.edu/api/intelfeed
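A rough sketch of how such labels could be attached to log events follows. The labels are not documented here, so the record shape is an assumption: each feed record is treated as a dictionary with hypothetical `ip` and `label` keys, and each log event as a dictionary with a hypothetical `src_ip` field. Adjust the key names to whatever the feed and your logs actually use.

```python
from collections import defaultdict


def build_label_index(records, ip_key="ip", label_key="label"):
    """Map each IP to the set of labels seen for it.

    ip_key and label_key are assumed field names, not documented ones.
    """
    index = defaultdict(set)
    for rec in records:
        index[rec[ip_key]].add(rec[label_key])
    return dict(index)


def enrich(event, index, src_field="src_ip"):
    """Return a copy of the event with a sorted list of matching labels added."""
    labels = sorted(index.get(event.get(src_field), ()))
    return {**event, "isc_labels": labels}


if __name__ == "__main__":
    # Made-up records using documentation-range addresses.
    records = [
        {"ip": "192.0.2.10", "label": "research"},
        {"ip": "192.0.2.10", "label": "scanner"},
    ]
    index = build_label_index(records)
    print(enrich({"src_ip": "192.0.2.10", "dport": 22}, index))
    print(enrich({"src_ip": "203.0.113.5", "dport": 443}, index))
```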

I need to document the labels better (maybe later today; watch http://isc.sans.edu/api for an update).

How to Use These Data Feeds Responsibly

First of all, be gentle on our servers. I recommend you pull the data once or twice a day. The data should not change more than once a day. Download the entire data and import it into your local database for lookups of individual IPs.
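One way to follow this advice is to load each daily download into a local SQLite table and query that instead of the API. The sketch below assumes the addresses have already been parsed out of the feed into a list of strings; the table name `researcher_ips` and the function names are made up for illustration.

```python
import sqlite3


def load_feed(conn, ips):
    """Replace the local copy of the feed with the latest download."""
    with conn:  # commit everything together, or roll back on error
        conn.execute(
            "CREATE TABLE IF NOT EXISTS researcher_ips (ip TEXT PRIMARY KEY)"
        )
        conn.execute("DELETE FROM researcher_ips")
        conn.executemany(
            "INSERT OR IGNORE INTO researcher_ips (ip) VALUES (?)",
            ((ip,) for ip in ips),
        )


def is_researcher(conn, ip):
    """Look up a single IP locally, without touching the API."""
    row = conn.execute(
        "SELECT 1 FROM researcher_ips WHERE ip = ?", (ip,)
    ).fetchone()
    return row is not None


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # use a file path for a persistent cache
    load_feed(conn, ["192.0.2.1", "192.0.2.2"])  # documentation-range examples
    print(is_researcher(conn, "192.0.2.1"), is_researcher(conn, "203.0.113.9"))
```

Replacing the whole table on each import keeps addresses that dropped off the feed from lingering in the local copy.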

Please use a custom user agent that includes contact information. We do not require authentication to use any of the data (considering it for the future...), but we need to be able to get hold of you in case your requests cause a problem. Otherwise, you may find yourself blocked. We do block some standard Python user agents for that reason (for people who do not read manuals :( ).
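With Python's standard library, setting such a user agent could look like the sketch below. The client name `example-feed-client/0.1` and the layout of the string are placeholders, not a required format; the point is only that the header carries a way to reach you.

```python
import urllib.request


def build_request(url, contact):
    """Build a request whose User-Agent carries contact information."""
    if not contact:
        raise ValueError("contact information is required")
    # Placeholder client name; use something that identifies your tool.
    user_agent = f"example-feed-client/0.1 (contact: {contact})"
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def fetch(url, contact, timeout=30):
    """Download the feed body as bytes using the custom User-Agent."""
    with urllib.request.urlopen(build_request(url, contact), timeout=timeout) as resp:
        return resp.read()


if __name__ == "__main__":
    req = build_request(
        "https://isc.sans.edu/api/threatcategory/research?json",
        "soc@example.org",  # replace with a real contact address
    )
    print(req.get_header("User-agent"))
```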

Do not use the data as a blocklist. The data is provided on a "best-effort" basis. It may, for example, include IP addresses of cloud proxies like Cloudflare, so blocking on it can cut off IPs that legitimate users depend on. I don't want my phone to ring off the hook again because some federal agency decides to block IPs based on our data just ahead of a big filing deadline, and users cannot connect to their site.

You may use the data to protect your own network, commercial or not. But you may not resell it (don't resell something you didn't pay for :-) ).

---

Johannes B. Ullrich, Ph.D. , Dean of Research, SANS.edu
