Running Your Own Passive DNS Service

Passive DNS is not new, but it remains a very useful component to have in your hunting arsenal. As defined by CIRCL, a passive DNS is "a database storing historical DNS records from various resources. The historical data is indexed, which makes it searchable for incident handlers, security analysts or researchers". There are plenty of existing passive DNS services: CIRCL[1], VirusTotal, RiskIQ, etc. I use them quite often but, sometimes, they simply don't have any record for a domain or an IP address I'm interested in. If you work for a big organization or a juicy target (depending on your business), why not operate your own passive DNS? You'll collect data from your own network, which reflects the traffic of your own users.

The first step is to collect your DNS data. You can (and it's highly recommended) log all DNS queries performed by your hosts, but there is a specific free tool that I use to collect passive DNS data: passivedns[2]. It's a network sniffer that collects all DNS answers and logs them, so it is not intrusive and has a low footprint. The tool is quite old but runs perfectly on recent Linux distros.

AFAIK, it is not available in classic repositories and you'll have to compile it. I recommend enabling the JSON output format (not enabled by default):

sansisc# ./configure --enable-json
sansisc# make install

Note: You will probably need some dependencies (libjansson-dev, libldns-dev, libpcap-dev).

Once compiled, run it with the following syntax:

sansisc# /usr/local/bin/passivedns -D -i eth1 -j -l /var/log/passivedns.json

Note: In the command above, I assume that you already receive a mirror of your traffic on eth1. My passivedns process is running on my SecurityOnion instance.

A few seconds later, you should see interesting data stored in /var/log/passivedns.json:

{
  "timestamp_s":1553714372,
  "timestamp_ms":268056,
  "client":"192.168.254.8",
  "server":"204.51.94.8",
  "class":"IN",
  "query":"isc.sans.org.",
  "type":"A",
  "answer":"204.51.94.153",
  "ttl":10,
  "count":1
}

The output has been reformatted for readability. The log file contains one JSON event per line.
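
If you want to look at the file before indexing it anywhere, the one-event-per-line layout is easy to read with a few lines of Python. This is only a minimal sketch: the helper names parse_events and event_time are mine, not part of passivedns, and I only rely on the timestamp_s field shown in the sample above, which I assume to be epoch seconds.

```python
import json
from datetime import datetime, timezone


def parse_events(lines):
    """Yield one dict per non-empty JSON line, skipping lines that fail to parse."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            yield json.loads(line)
        except json.JSONDecodeError:
            # For example, a line that was only partially written so far.
            continue


def event_time(event):
    """Convert timestamp_s (assumed to be epoch seconds) to a UTC datetime."""
    return datetime.fromtimestamp(event["timestamp_s"], tz=timezone.utc)


# The sample event from above, on one line, followed by a broken line.
sample = [
    '{"timestamp_s":1553714372,"timestamp_ms":268056,"client":"192.168.254.8",'
    '"server":"204.51.94.8","class":"IN","query":"isc.sans.org.","type":"A",'
    '"answer":"204.51.94.153","ttl":10,"count":1}',
    "not json",
]
events = list(parse_events(sample))
for event in events:
    print(event_time(event).isoformat(), event["client"], event["query"], event["answer"])
```

To run it against the real log, pass an open file handle instead of the sample list, for example parse_events(open("/var/log/passivedns.json")).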

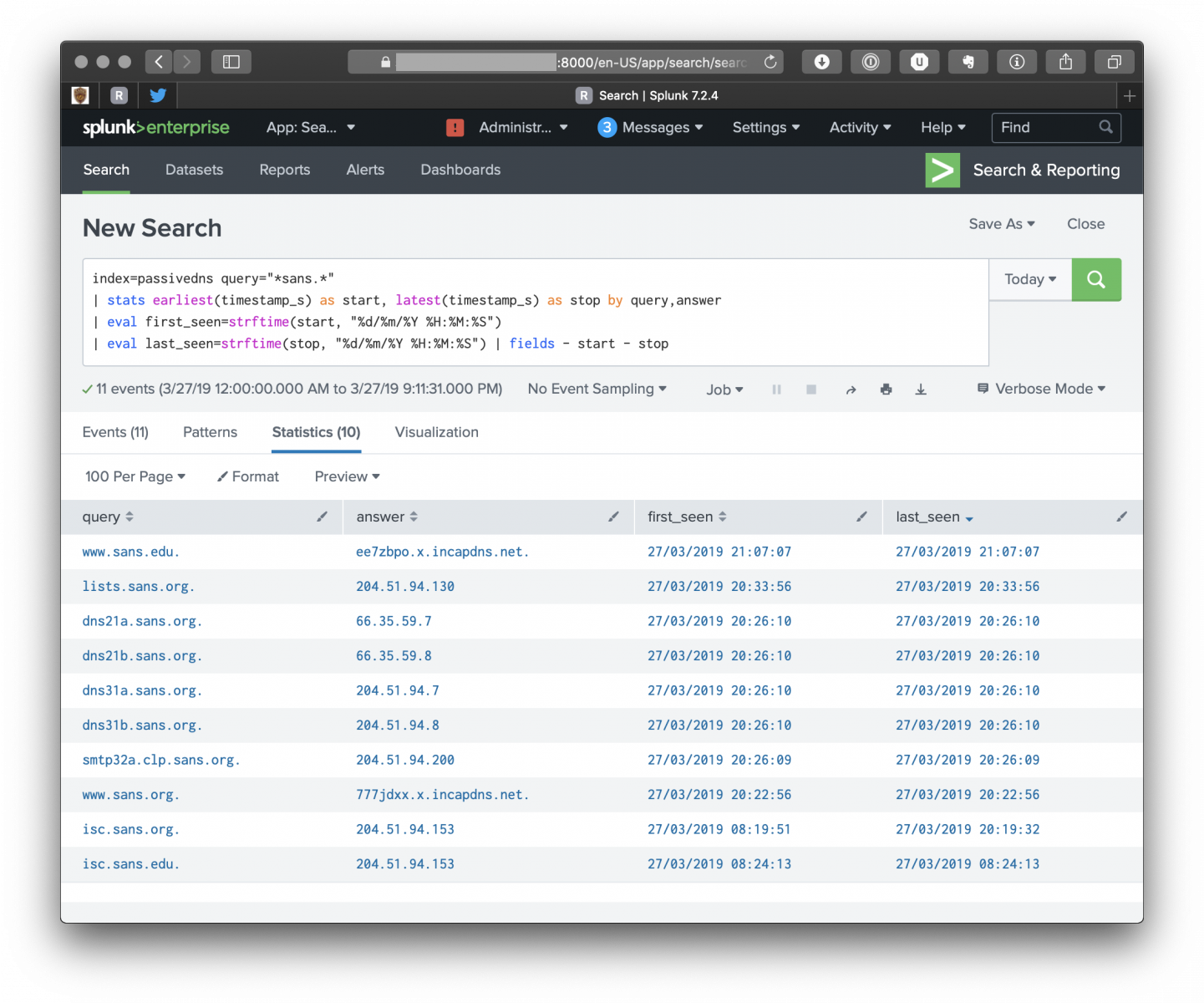

The next step is to process the file. passivedns comes with some scripts to store data in a local db and perform some queries. In my case, I prefer to index the log file in my Splunk (or ELK, or … name your preferred tool). Here is a Splunk query example that searches for queries containing "sans.":

index=passivedns query="*sans.*" | stats earliest(timestamp_s) as start, latest(timestamp_s) as stop by query,answer | eval first_seen=strftime(start, "%d/%m/%Y %H:%M:%S") | eval last_seen=strftime(stop, "%d/%m/%Y %H:%M:%S") | fields - start - stop
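
If you don't have Splunk at hand, the same first seen / last seen aggregation can be approximated directly on the log file. The following is a rough sketch, not a replacement for a real index: first_last_seen and fmt are hypothetical helper names, the match is a simple case-insensitive substring test, and timestamps are rendered in UTC (Splunk may render them in another time zone).

```python
import json
from datetime import datetime, timezone


def first_last_seen(lines, needle):
    """Map (query, answer) to (first timestamp_s, last timestamp_s)."""
    needle = needle.lower()
    seen = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        query = event.get("query", "")
        if needle not in query.lower():
            continue
        key = (query, event.get("answer"))
        ts = event["timestamp_s"]
        # Keep the smallest and largest timestamp seen for this pair.
        start, stop = seen.get(key, (ts, ts))
        seen[key] = (min(start, ts), max(stop, ts))
    return seen


def fmt(ts):
    """Same date format as the Splunk query, rendered in UTC."""
    return datetime.fromtimestamp(ts, tz=timezone.utc).strftime("%d/%m/%Y %H:%M:%S")


# Made-up events for illustration; only the fields used here are present.
sample_lines = [
    '{"timestamp_s":1553714372,"query":"isc.sans.org.","answer":"204.51.94.153"}',
    '{"timestamp_s":1553714400,"query":"isc.sans.org.","answer":"204.51.94.153"}',
    '{"timestamp_s":1553714380,"query":"example.com.","answer":"192.0.2.1"}',
]
for (query, answer), (start, stop) in first_last_seen(sample_lines, "sans.").items():
    print(query, answer, fmt(start), fmt(stop))
```

Everything is kept in memory, which is fine for a quick check but will not scale like a proper index.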

Passive DNS is a nice alternative to the regular collection of DNS logs if you can't get access to them because your System Admin is not cooperative (yeah, this happens!). You can also match your passive DNS data against malicious domains from a threat intelligence tool like MISP. The following query searches for malicious domains extracted from MISP over the last month and shows which IP address performed each query. This query should NOT return any record; otherwise, it's time to investigate.

index=passivedns [|mispgetioc last=30d category="Network activity" type="domain"| rename misp_domain as query | table query] | stats earliest(timestamp_s) as start, latest(timestamp_s) as stop by client, query | eval first_seen=strftime(start, "%d/%m/%Y %H:%M:%S") | eval last_seen=strftime(stop, "%d/%m/%Y %H:%M:%S") | fields - start - stop
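
The same idea can be sketched outside Splunk, assuming you have already exported the malicious domains from your threat intelligence platform into a plain list of strings (how you export them is up to you and not shown here). The names normalize and find_ioc_hits are hypothetical. Note that the sketch strips the trailing dot that passivedns stores at the end of the query and only does exact matches, so subdomains of a listed domain are not reported.

```python
import json


def normalize(domain):
    """Lowercase a domain and drop the trailing dot used in DNS answers."""
    return domain.strip().lower().rstrip(".")


def find_ioc_hits(lines, bad_domains):
    """Map (client, query) to (first timestamp_s, last timestamp_s) for listed domains."""
    bad = {normalize(d) for d in bad_domains}
    hits = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        query = normalize(event.get("query", ""))
        if query not in bad:
            continue
        key = (event.get("client"), query)
        ts = event["timestamp_s"]
        start, stop = hits.get(key, (ts, ts))
        hits[key] = (min(start, ts), max(stop, ts))
    return hits


# Made-up events and indicators for illustration only.
sample_lines = [
    '{"timestamp_s":100,"client":"192.168.254.8","query":"malicious.example.","answer":"192.0.2.10"}',
    '{"timestamp_s":200,"client":"192.168.254.8","query":"Malicious.Example.","answer":"192.0.2.10"}',
    '{"timestamp_s":150,"client":"192.168.254.9","query":"isc.sans.org.","answer":"204.51.94.153"}',
]
hits = find_ioc_hits(sample_lines, ["malicious.example", "other.example"])
for (client, query), (start, stop) in hits.items():
    print(client, query, start, stop)
```

As with the Splunk query, an empty result is the expected outcome; any hit tells you which client to look at first.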

Note: mispgetioc is part of a project available on GitHub that allows querying the MISP API from Splunk[3].

Not yet convinced? Do not hesitate to deploy such a tool in your networks. And you? Do you collect passive DNS data? Please share your ideas/comments!

[1] https://www.circl.lu/services/passive-dns/

[2] https://github.com/gamelinux/passivedns

[3] https://github.com/remg427/misp42splunk

Xavier Mertens (@xme)

Senior ISC Handler - Freelance Cyber Security Consultant



Comments

Anonymous

Mar 28th 2019


https://www.minds.com/newsfeed/704852903688413192?referrer=linuxgeek

A few tidbits tho, if I were doing it again today I'd also put all the intel-feeds (compromised IPs, known malicious IPs or domains) as separate RPZ zones. Why? I started blocking web-ads/analytics sites that I found in the DNS logs just before a spyware/adware related query that led to an infection. Yeah, I know I'm bad cuz ads pay for content. But I got sick of sites that wanted to track my users' every move on the internet and wound up feeding infected ads with java/flash/silverlight exploits.

Anyway, I got to where I wanted to filter out these DNS queries while searching through the logs. I wanted to still block all the analytics/tracking related queries, but when I was visualizing the top X people hitting the DNS filters and what they were querying for, I didn't want to see web-ad and tracking junk, I wanted to see hits on the "compromised IP" DNS filters. :-)

Anonymous

Mar 29th 2019
