Patching in 2 days? - "tell him he's dreaming"

With all the patching you have been doing lately, I thought it would be opportune to look at what can and can't be done within two days. Why two days? Quite a few standards want you to, which is one reason, but the more compelling reason is that it takes less and less time for vulnerabilities to be weaponised. Over the past year or so we have seen vulnerabilities released and, within hours, vulnerable systems identified and in many cases exploited. That is a more compelling reason than "the standard says to". To be fair, the standard typically has the requirement in there for exactly that reason.

So why does patching instill such dread in many? There tend to be a number of reasons. The main objections I come across are:

- It might break something

- It is internal therefore we're ok AKA "we have a firewall"

- Those systems are critical and we can't reboot them

- The vendor won't let us

It might break something

Yes it could, absolutely. Most vendors have pushed patches that, despite their efforts to test prior to release, broke something. In reality, however, the occurrences are low, and where possible you should be pushing patches to test systems prior to production implementation anyway, so ...

It is internal therefore we're ok AKA "we have a firewall"

This has to be one of my favourites. Many of us have M&M environments: hard on the outside and nice and gooey soft on the inside. That is exactly what attackers are looking for, and it is one of the reasons why phishing is so popular: you get your malware executed by someone on the inside. To me this is not really a reason. I will let you use it to prioritise patching, sure, but that assumes you then go and patch your internet facing or other critical devices first.

Those systems are critical and we can't reboot them

Er, OK. Granted, you all have systems that are critical to the organisation, but if standard management functions cannot be performed on a system, then that in itself should probably have been raised as a massive risk to the organisation. There are plenty of denial of service vulnerabilities that will cause a reboot. If an internet facing system can't be rebooted, I suspect you might want to take a look at that on Monday. For internal systems, maybe it is time to segment them from the normal network as much as you possibly can to reduce the risk to those systems.

The vendor won't let us

Now it is easy for me to say get a different vendor, but that doesn't really help you much. I find that when you discuss exactly what you can or can't change the vendor will often be quite accommodating. In fact most of the time they may not even be able to articulate why you can't patch. I've had vendors state you couldn't patch the operating system, when all of their application was Java. Reliant on Java, sure, reliant on a Windows patch for IE, not so much. Depending on how important you are to them they may come to the party and start doing things sensibly.

If you still get no joy, then maybe it is time to move the system to a more secure network segment and limit the interaction between it and the rest of the environment, allowing work to continue while reducing the attack surface.

So the two days, achievable?

Tricky but yes. You will need to have all your ducks lined up and have the right tools and processes in place.

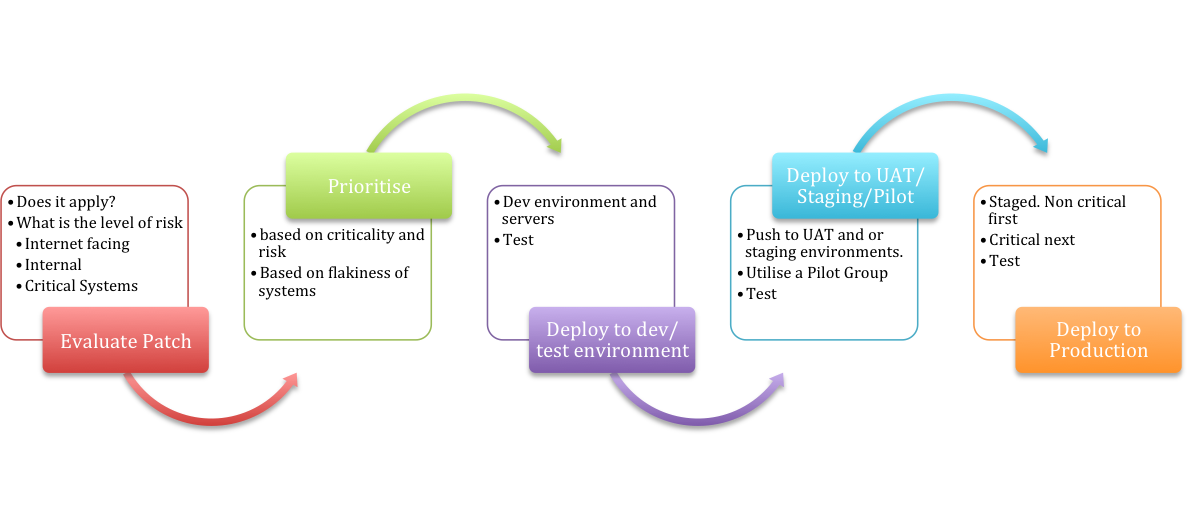

Let's have a look at the process first. Generally speaking, the process will be pretty much the same for all of you. A high level process is below; I suspect it is familiar to most of you.

Evaluate the patch.

There are a number of different approaches that organisations take. Some organisations will just accept the vendor recommendation. If the vendor states the patch is critical then it gets applied, no questions asked. Well, one question: do we have the product/OS that needs to be patched? If yes, then patch.

Other organisations take a more granular approach. They may not be quite as flexible in applying all patches as they are released, or possibly rebooting systems is a challenge (we have all come across systems that, when rebooted, have a hard time coming back). In those organisations the patch needs to be evaluated. In those situations I like using the CVSS scores and applying any modifiers that are relevant to the site to get a more accurate picture of the risk to my environment (https://nvd.nist.gov/cvss.cfm). If you go down this path, make sure you have some criteria in place for considering things critical. For example, if your process states that only a CVSS score of 10 is critical and scores of 9.9 or lower are high, medium, low and so on, I'd probably be querying the thinking behind that. Pick what works for you, but keep in mind that most auditors/reviewers will question the choice and you will need to be able to defend it.
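To make the "criteria for critical" idea concrete, here is a minimal sketch of a site policy that maps a score to a label. The band edges are purely illustrative assumptions, not something taken from a standard or from this diary; the point is only that the thresholds are written down in one place where you can defend them.

```python
# Hypothetical site policy: these band edges are illustrative assumptions.
# Pick your own and be ready to justify them to an auditor.
SITE_BANDS = [
    (9.0, "critical"),
    (7.0, "high"),
    (4.0, "medium"),
    (0.1, "low"),
]


def classify(score: float) -> str:
    """Map a CVSS score (0.0 to 10.0) to this site's severity label."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS scores range from 0.0 to 10.0, got {score}")
    # Bands are ordered highest first, so the first floor we reach wins.
    for floor, label in SITE_BANDS:
        if score >= floor:
            return label
    return "none"
```

With bands like these a 9.9 is still treated as critical, which avoids the "only a 10 counts" problem.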

Many patches address a number of CVEs. I generally pick the highest scoring one and evaluate it. If, after applying the site modifiers, it drops below the critical score we have specified but is close, I may evaluate a second one. If the score remains in the critical range, I won't evaluate the rest of the CVEs. It is not like you can apply a patch that addresses multiple CVEs for only one of them.
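The shortcut of evaluating only the top one or two CVEs could be sketched roughly as follows. The threshold, the "close" margin and the `adjust` callback (which stands in for whatever site-specific modifiers you apply) are all assumptions made for illustration.

```python
from typing import Callable, Dict, List, Tuple

CRITICAL = 9.0      # hypothetical site threshold for "critical"
CLOSE_MARGIN = 1.0  # hypothetical definition of "close to critical"


def patch_is_critical(
    base_scores: Dict[str, float],
    adjust: Callable[[float], float],
) -> Tuple[bool, List[str]]:
    """Return (is_critical, cves_evaluated) for a single patch.

    base_scores maps CVE id to base score; adjust applies site modifiers.
    """
    evaluated: List[str] = []
    ranked = sorted(base_scores.items(), key=lambda kv: kv[1], reverse=True)
    for cve, base in ranked[:2]:  # never look past the two highest scores
        evaluated.append(cve)
        adjusted = adjust(base)
        if adjusted >= CRITICAL:
            return True, evaluated  # still critical: skip the rest
        if adjusted < CRITICAL - CLOSE_MARGIN:
            break  # well below critical: a second look is not worth it
    return False, evaluated
```

For example, `patch_is_critical({"CVE-A": 10.0, "CVE-B": 9.3}, lambda s: s)` stops after the first CVE, because one critical finding is enough to put the whole patch in the critical queue. The CVE ids here are placeholders.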

Prioritise

You already know which servers, workstations and devices are critical to the organisation. If not, that might be a good task for Monday; junior can probably do most of this. Based on the patch you will know what it patches, and most vendors provide some information on the attack vector and the expected impact. Use this information to identify which types of servers/workstations/devices in your organisation should be at the top of the patching list. Are they internet facing machines? Machines running a particular piece of software?
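As a rough sketch of turning those questions into a priority list: the `Host` fields and the ranking rule below are assumptions for illustration, and a real inventory will have more attributes. Note this ranks urgency; it does not dictate the order in which you roll out within production.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Host:
    # Hypothetical inventory record; field names are illustrative only.
    name: str
    internet_facing: bool
    business_critical: bool
    runs_affected_software: bool


def patch_priority(hosts: Iterable[Host]) -> List[Host]:
    """Drop hosts the patch does not apply to, then rank the remainder."""
    in_scope = [h for h in hosts if h.runs_affected_software]
    # False sorts before True, so negate the flags to put them first.
    return sorted(
        in_scope,
        key=lambda h: (not h.internet_facing, not h.business_critical, h.name),
    )
```

Filtering out hosts that do not run the affected software is what shrinks the scope and makes the 48 hours more realistic.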

You might want to check our site as well. On patch Tuesday we publish patching information and break it down into server and client patches as well as priorities (PATCH NOW means exactly that). Based on these answers you may be able to reduce the scope of your patching and therefore make the 48 hours more achievable.

Deploy to Dev/Test environment

Once the patches are known, at least those that must be applied within the 48 hours, push them to your Dev/Test servers/workstations, assuming you have them. If you do not, you might need to designate some machines and devices, non-critical of course, as your test machines. Deploy the patches as soon as you can, monitor for odd behaviour and have people test. Some organisations have scripted the testing, and OPS teams can run these scripts to ensure systems are still working. Others that are not quite that automated may need some assistance from the business to validate that the patches didn't break anything.
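If you do script the testing, even a very small runner helps. The sketch below is one possible shape, not a description of any particular tool: each check is a hypothetical zero-argument function you write for your own systems (for example "can I log in", "does the service answer"), and the runner simply collects what failed.

```python
from typing import Callable, Dict, List


def run_smoke_tests(checks: Dict[str, Callable[[], bool]]) -> List[str]:
    """Run every named check and return a list of failure descriptions."""
    failures: List[str] = []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception as exc:
            # A crashing check is a failed check; keep going with the rest.
            failures.append(f"{name}: raised {exc!r}")
            continue
        if not ok:
            failures.append(f"{name}: failed")
    return failures
```

An empty list means the patched test machines passed everything you thought to check, which is the signal to move on to the next stage.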

Deploy to UAT/Staging/Pilot

Once the tests have been completed, the patches can be pushed out to the next stage: a UAT or staging environment. If you do not have this, and you likely won't have one for all your systems, maybe set up a pilot group that is representative of the servers/devices you have in the environment. In the case of workstations, pick those that are representative of the various user groups in the organisation. Again, test that things are still functioning as they should. These tests should be standardised where possible, and quick and easy to execute and verify. Once confirmed working, the production roll out can be scheduled.
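Picking a representative pilot group can be as simple as taking a machine or two from each user group. A minimal sketch, assuming you can export workstations as (hostname, user group) pairs; the selection rule (first by name) is an arbitrary choice made so the result is repeatable.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def pick_pilot(
    workstations: Iterable[Tuple[str, str]], per_group: int = 1
) -> List[str]:
    """Choose per_group hostnames from every user group."""
    by_group: Dict[str, List[str]] = defaultdict(list)
    for hostname, group in workstations:
        by_group[group].append(hostname)
    pilot: List[str] = []
    for group in sorted(by_group):
        # Sorting keeps the choice deterministic from one run to the next.
        pilot.extend(sorted(by_group[group])[:per_group])
    return pilot
```

In practice you would probably prefer volunteers or known heavy users within each group over an alphabetical pick.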

Deploy to Production

Schedule the non-critical servers that need patching first, just in case. By now, though, the patches have been applied to a number of machines and have passed at least two sets of tests, and prior to deployment to production you quickly checked the isc.sans.edu diary entry to see if people have experienced issues. Our readers are pretty quick at identifying potential issues (make sure you submit a comment if you come across one). Ready to go.

Assuming that you have the information in place and the processes and have the resources to patch, you should be able to patch dev/test within the first 4 hours after receiving the patch information. Evaluating the information should not take long. Close to lunch time UAT/staging/Pilot groups can be patched (assuming testing is fine). Leave them overnight perhaps. Once confirmed there are no issues start patching production and schedule the reboots if needed for that evening.
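To check whether a schedule like this fits inside the window, you can lay it out as hour offsets from the moment the patch information arrives. In the sketch below only the 4-hour dev/test figure and the 48-hour deadline come from the text above; the other offsets are assumptions standing in for "close to lunch", "overnight" and "that evening".

```python
from datetime import datetime, timedelta
from typing import List, Tuple

# Hours after the patch information is received. Apart from the 4-hour
# dev/test step, these offsets are illustrative assumptions.
PLAN_HOURS = [
    ("dev/test patched", 4),
    ("UAT/staging/pilot patched", 6),
    ("production patching starts", 26),
    ("production reboots", 36),
]
DEADLINE_HOURS = 48


def build_timeline(
    received: datetime,
) -> Tuple[List[Tuple[str, datetime]], datetime]:
    """Return the planned steps with timestamps, plus the 48-hour deadline."""
    timeline = [(step, received + timedelta(hours=h)) for step, h in PLAN_HOURS]
    deadline = received + timedelta(hours=DEADLINE_HOURS)
    late = [step for step, when in timeline if when > deadline]
    if late:
        raise ValueError(f"steps past the deadline: {late}")
    return timeline, deadline
```

Adjust the offsets to your own change windows; the check at the end will complain if a step slips past the two days.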

Patched within 2 days, dreaming? Nope. Possible. Tough, but possible.

For those of you that have patching solutions in place, your risk level and the effort needed are probably lower than for others. By the time the patch has been released to you, it has been packaged and some rudimentary testing has already been done by the vendor. The errors that blow up systems will likely already have been caught. For those of you that do not have patching solutions, have a good look at what WSUS can do for you. With the third party add-on it can also be used to patch third party products (http://wsuspackagepublisher.codeplex.com/). You may have to do some additional work to package things up, which may slow you down, but it shouldn't be too much.

It is always good to improve, so if you have some hints, tips, good practices or horrible disasters that you can share so others can avoid them, leave a comment.

Happy patching. We'll be doing more over the next few weeks.

Mark H - Shearwater
